In today's data-driven landscape, marketing and analytics teams are swimming in data from countless platforms: CRMs, ad networks, web analytics, and more. The real challenge isn't collecting this data; it's connecting it. Disjointed information leads to inaccurate attribution, wasted ad spend, and missed opportunities. True competitive advantage comes from creating a unified, reliable data ecosystem where insights flow freely between systems.

This article moves beyond generic advice to provide a definitive guide. We will explore eight critical data integration best practices that empower you to build robust, scalable, and secure data pipelines. By implementing these strategies, you can ensure your data is not just big, but also intelligent, accessible, and ready to drive growth.

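You will learn how to establish a data integration strategy and governance framework, build an incremental and modular architecture, embed data quality checks throughout the pipeline, manage metadata and data lineage, adopt real-time and event-driven integration where it matters, design for error handling and monitoring, build in security, privacy, and compliance from the start, and automate delivery with DataOps.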

For marketers looking to unify every touchpoint, platforms that offer one-click conversion syncs and server-side tracking exemplify how modern tools can simplify these complex integrations, ensuring data accuracy from the source to ad platforms. This guide provides the foundational principles needed to select and implement such solutions effectively, turning siloed data into your most valuable asset.

A robust data integration strategy is the blueprint for success, acting as the foundational layer upon which all integration activities are built. Without a clear plan, efforts become disjointed, leading to data silos, inconsistent quality, and misalignment with core business objectives. This practice involves defining clear goals, standards, ownership, and governance policies to ensure every integration effort adds strategic value.

Effective governance is not about restriction; it's about empowerment. It provides a structured framework that clarifies who owns what data, how it can be used, and the quality standards it must meet. By implementing policies for data access, security, and compliance from the outset, you create a trusted data ecosystem that supports reliable analytics and decision-making.
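To make the idea of ownership, access, and quality standards concrete, here is a minimal sketch of a governance registry in Python. The dataset names, roles, and thresholds are hypothetical, and in practice these policies would more likely live in a data catalog than in code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetPolicy:
    """Governance record for one dataset (all fields are illustrative)."""
    owner: str                # team accountable for the data
    allowed_roles: frozenset  # roles permitted to read it
    contains_pii: bool        # flags data that needs stricter handling
    min_completeness: float   # quality bar, from 0.0 to 1.0


# Hypothetical policy registry keyed by dataset name.
POLICIES = {
    "crm.contacts": DatasetPolicy("sales-ops", frozenset({"analyst", "marketer"}), True, 0.98),
    "ads.spend": DatasetPolicy("growth", frozenset({"analyst"}), False, 0.95),
}


def can_read(dataset: str, role: str) -> bool:
    """Allow access only if a policy exists and the role is on its allow list."""
    policy = POLICIES.get(dataset)
    return policy is not None and role in policy.allowed_roles


def meets_quality_bar(dataset: str, completeness: float) -> bool:
    """Compare a measured completeness ratio with the dataset's standard."""
    return completeness >= POLICIES[dataset].min_completeness
```

Datasets without a registered policy are denied by default, so any new integration has to declare an owner and a standard before anyone can read from it.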

Establishing a formal strategy and governance framework is one of the most critical data integration best practices because it transforms data from a chaotic collection of assets into a managed, reliable resource. It directly addresses common failure points like poor data quality, security vulnerabilities, and compliance risks.

Companies that excel in this area see tangible benefits. For instance, Capital One's centralized data governance strategy reportedly reduced data integration time by 40% while improving compliance. Similarly, Walmart’s unified model for integrating data from over 11,000 stores enabled real-time inventory management, a massive competitive advantage.

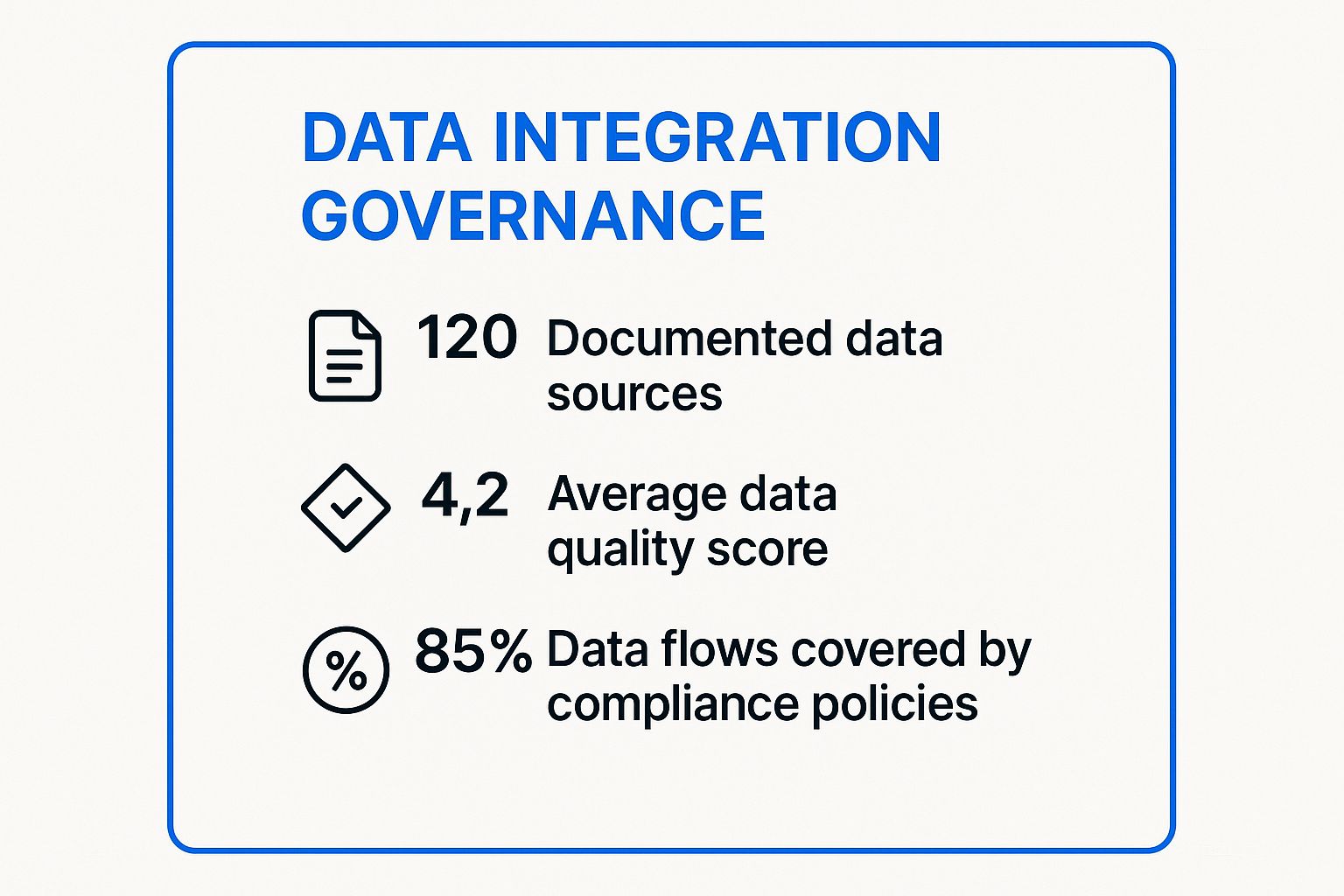

The following infographic highlights key metrics that a well-defined governance framework helps you track and improve.

These metrics provide a clear, quantitative view of your governance program's effectiveness, helping you pinpoint areas for improvement and demonstrate ROI. To learn more about how a structured approach can specifically benefit your marketing efforts, explore these best practices for marketing data integration.

An incremental and modular architecture breaks down massive data integration projects into small, manageable components. Instead of building a single, complex system, this approach involves creating loosely coupled, reusable integration modules that can be developed, tested, and deployed independently. This method allows organizations to deliver value faster, reduce project risk, and adapt quickly to changing business requirements.

This modern, agile-friendly strategy contrasts with traditional monolithic approaches, where a small change can require rebuilding and redeploying the entire system. By building a flexible infrastructure of independent services, you empower teams to innovate and scale their specific data pipelines without disrupting the broader ecosystem. This concept, popularized by thought leaders like Martin Fowler, is the backbone of microservices architecture.
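As a rough illustration of loosely coupled, reusable modules, the sketch below composes small, independent steps into a pipeline. The step names and record shape are assumptions made for the example, not a prescribed design.

```python
from typing import Callable, Iterable

Record = dict
Step = Callable[[Iterable[Record]], Iterable[Record]]


def drop_missing_email(records):
    """Module 1: filter out records that have no email value."""
    return (r for r in records if r.get("email"))


def normalize_email(records):
    """Module 2: trim and lowercase the email field."""
    return ({**r, "email": r["email"].strip().lower()} for r in records)


def build_pipeline(*steps: Step) -> Callable[[Iterable[Record]], list]:
    """Compose independent steps into one callable pipeline."""
    def run(records):
        for step in steps:
            records = step(records)
        return list(records)
    return run


# Each team can assemble its own pipeline from the shared modules.
crm_pipeline = build_pipeline(drop_missing_email, normalize_email)
```

Because every step takes records in and hands records out, a module can be tested, replaced, or reused in another pipeline without touching the others.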

Adopting a modular architecture is one of the most effective data integration best practices for achieving agility and scalability. It directly mitigates the risks associated with "big bang" deployments, where projects often fail due to overwhelming complexity, budget overruns, and shifting priorities. This incremental approach ensures a continuous delivery of value and allows for easier course correction.

Tech giants have proven the power of this model. Spotify’s data integration platform uses a modular, event-driven architecture that enables over 200 engineering teams to work independently. Similarly, Uber manages over 100 petabytes of data using reusable pipeline components, allowing for rapid development and deployment across various business units.

The video below offers a deeper dive into microservices, a key architectural pattern for building modular systems.

By building your integration framework piece by piece, you create a more resilient, flexible, and future-proof data ecosystem that can evolve alongside your business.

Treating data quality as an afterthought is a recipe for disaster. This best practice involves embedding comprehensive data quality checks at every single stage of the integration pipeline, from initial source extraction and transformation all the way to the final load into the destination system. It’s about building a culture of quality, not just performing a one-time cleanup.

This proactive approach involves establishing clear data quality rules, implementing automated validation processes, designing robust error handling mechanisms, and using data profiling to ensure only accurate, complete, and consistent data moves forward. The core principle is that data quality issues compound as data moves downstream; a small error at the source can corrupt entire datasets and lead to flawed business decisions.
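A simple way to picture automated validation with error handling is a set of named rules plus a quarantine list for records that fail them. The rules, field names, and email pattern below are illustrative assumptions; real pipelines often rely on a dedicated validation framework.

```python
import re

# Deliberately loose email pattern, used only for illustration.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical quality rules: each maps a name to a pass/fail check.
RULES = {
    "email_format": lambda r: bool(EMAIL_RE.match(str(r.get("email", "")))),
    "revenue_non_negative": lambda r: isinstance(r.get("revenue"), (int, float)) and r["revenue"] >= 0,
    "order_id_present": lambda r: bool(r.get("order_id")),
}


def validate(records):
    """Split records into valid rows and quarantined rows with failure reasons."""
    valid, quarantined = [], []
    for record in records:
        failed = [name for name, rule in RULES.items() if not rule(record)]
        if failed:
            quarantined.append({"record": record, "failed_rules": failed})
        else:
            valid.append(record)
    return valid, quarantined
```

Quarantining bad rows with the names of the rules they broke keeps them out of the destination system while leaving a trail that someone can investigate and fix at the source.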

Integrating data quality into the entire pipeline is one of the most crucial data integration best practices because it prevents the classic "garbage in, garbage out" problem. It ensures that the insights and analytics generated from integrated data are trustworthy, reliable, and actionable. Ignoring this leads to a lack of trust in data, wasted engineering resources on remediation, and costly business errors.

Leading companies demonstrate the immense value of this focus. PayPal, for example, implements over 50 distinct data quality checks in its payment processing pipeline, which helps catch 99.97% of data errors before they can impact customer transactions. Similarly, JPMorgan Chase employs real-time data quality monitoring for its financial transactions, running validation rules across billions of records daily to ensure accuracy and compliance.

By embedding these checks, you build a resilient system that self-corrects and maintains a high standard of data integrity. This is particularly vital for advertising platforms, where data accuracy directly impacts campaign performance. To see how this applies to ad data, you can learn more about enhancing your Meta Event Match Quality.

Effective data integration is impossible without understanding the data itself. Metadata, or "data about data," provides the context needed to make sense of your assets. Comprehensive metadata management involves systematically capturing information about data sources, transformations, and business rules, while data lineage specifically documents the journey of data from its origin to its final destination. Together, they create a transparent, auditable, and trustworthy data ecosystem.

This practice serves as the essential documentation backbone for your entire integration architecture. It clarifies what data means, where it came from, and how it has changed. By investing in robust metadata management and lineage tracking, you empower teams to find, understand, and trust the data they use, which is critical for accurate reporting and analytics.

Implementing metadata and lineage is one of the most impactful data integration best practices because it addresses the root causes of data misuse and mistrust. It provides answers to critical questions like "Where did this number come from?" and "What will break if I change this field?" This transparency is crucial for troubleshooting, impact analysis, and meeting stringent regulatory compliance requirements.
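Both of those questions reduce to walking a lineage graph in one direction or the other. Here is a minimal sketch, assuming lineage is stored as upstream-to-downstream edges; the dataset names are made up for the example.

```python
from collections import defaultdict, deque

# Hypothetical lineage edges: (upstream asset, asset derived from it).
EDGES = [
    ("crm.contacts", "staging.contacts_clean"),
    ("ads.clicks", "staging.clicks_clean"),
    ("staging.contacts_clean", "marts.attribution"),
    ("staging.clicks_clean", "marts.attribution"),
    ("marts.attribution", "dashboards.roas"),
]

downstream = defaultdict(set)
upstream = defaultdict(set)
for src, dst in EDGES:
    downstream[src].add(dst)
    upstream[dst].add(src)


def trace(start, graph):
    """Breadth-first walk returning every asset reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen
```

Calling `trace("ads.clicks", downstream)` lists everything that could break if that source changes, while `trace("dashboards.roas", upstream)` lists every input that feeds a given number.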

Organizations that master this see profound benefits. For instance, LinkedIn uses comprehensive metadata management to track lineage across over 100,000 datasets, allowing engineers to instantly understand dependencies. Similarly, Airbnb built a metadata platform called Dataportal that provides lineage for more than 50,000 datasets, reportedly reducing data discovery time by 80%.

By establishing a clear system for managing your data's context and history, you build a more resilient and reliable analytics foundation. To dive deeper into organizing your marketing data effectively, you can explore the principles of marketing data management.

Traditional batch processing, where data is moved in scheduled, bulk transfers, is no longer sufficient for many modern business needs. Adopting a real-time, event-driven approach means systems react to events as they happen, enabling immediate data availability and responsiveness. This architecture uses streaming platforms and message queues to capture, process, and distribute data changes the moment they occur, transforming operations from periodic updates to continuous intelligence.
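The pattern itself can be sketched with an in-memory queue standing in for a streaming platform or message broker. The `EventBus` class, event names, and payload fields here are hypothetical and only meant to show how producers and consumers are decoupled.

```python
from collections import defaultdict
from queue import Empty, Queue


class EventBus:
    """Toy stand-in for a message queue: publishers and subscribers never meet."""

    def __init__(self):
        self._queue = Queue()
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        self._queue.put((event_type, payload))

    def drain(self):
        """Deliver every queued event to its subscribers; return the count processed."""
        processed = 0
        while True:
            try:
                event_type, payload = self._queue.get_nowait()
            except Empty:
                return processed
            for handler in self._handlers[event_type]:
                handler(payload)
            processed += 1


revenue_by_channel = defaultdict(float)


def record_purchase(event):
    """Consumer: update a running revenue total as each purchase arrives."""
    revenue_by_channel[event["channel"]] += event["amount"]


bus = EventBus()
bus.subscribe("purchase", record_purchase)
bus.publish("purchase", {"channel": "search", "amount": 40.0})
bus.publish("purchase", {"channel": "social", "amount": 25.0})
bus.publish("purchase", {"channel": "search", "amount": 10.0})
processed = bus.drain()
```

The totals update per event rather than per nightly batch, which is the shift from periodic updates to continuous processing in miniature.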

This practice involves evaluating which business processes gain a competitive edge from timely data. While not every scenario needs millisecond latency, implementing streaming integration for critical functions like fraud detection, dynamic pricing, or real-time personalization creates significant, tangible value. It is about strategically applying the right integration pattern to the right business problem.

Moving to real-time integration is one of the most impactful data integration best practices because it directly enables proactive, data-driven decision-making. Instead of analyzing what happened yesterday, organizations can react to what is happening right now. This capability is crucial for enhancing customer experiences, mitigating risk, and optimizing operations in a fast-paced digital environment.

The benefits are clear across industries. For example, Bank of America implemented real-time fraud detection using event-driven integration, reportedly reducing fraud losses by 35%. Similarly, DoorDash leverages real-time event streaming to process millions of events per second, enabling live order tracking and dynamic delivery optimization, which are central to its business model.

Data integration pipelines are complex systems where failures are not just possible, but inevitable. A proactive approach involves designing for resilience by building robust error detection, handling, and recovery mechanisms directly into your workflows. This practice shifts the focus from hoping failures won't happen to ensuring that when they do, they are detected instantly, handled gracefully, and resolved with minimal business impact.

Effective error handling and monitoring go beyond simple pass-fail checks. They involve implementing detailed logging, automated alerts, retry logic for temporary issues, and fallback mechanisms for critical failures. By anticipating potential problems, you create a data integration environment that is both resilient and trustworthy, ensuring that downstream analytics and operations are not compromised by silent data corruption or pipeline outages.
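As one possible shape for retry logic with a fallback, the sketch below retries a task with exponential backoff, logs each failure, and falls back only once the attempts are exhausted. `TransientError`, the parameter names, and the default delays are assumptions for illustration.

```python
import logging
import time

logger = logging.getLogger("pipeline")


class TransientError(Exception):
    """Raised for failures worth retrying, such as timeouts or rate limits."""


def run_with_retry(task, *, attempts=3, base_delay=0.5, fallback=None, sleep=time.sleep):
    """Run task, retrying transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except TransientError as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt < attempts:
                # Wait 0.5s, 1s, 2s, ... before the next attempt.
                sleep(base_delay * 2 ** (attempt - 1))
    logger.error("all %d attempts failed", attempts)
    if fallback is None:
        raise RuntimeError("task failed after retries and no fallback was provided")
    return fallback()
```

Only errors marked as transient are retried; anything else surfaces immediately, so genuine bugs are not hidden behind repeated attempts. The `sleep` parameter is injectable so the backoff can be tested without waiting.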

Implementing comprehensive error handling and monitoring is one of the most vital data integration best practices because it directly addresses the operational reality of data pipelines. It transforms your integration process from a fragile, high-maintenance system into a self-healing, reliable one. This practice prevents minor glitches from cascading into catastrophic failures, saving countless hours in manual debugging and protecting business-critical decisions from being based on incomplete or erroneous data.

Companies that master this practice maintain exceptional service reliability. For instance, Stripe’s payment data integration achieves 99.999% reliability by using sophisticated error handling that automatically retries failed webhooks. Similarly, Netflix’s Chaos Engineering principles ensure its data pipelines can automatically recover from major infrastructure failures, maintaining a seamless user experience. Shopify monitors over 50,000 metrics across its platform, a strategy that helps it detect and resolve 90% of issues before they impact customers.

Integrating security, privacy, and compliance into your data integration process from day one is non-negotiable. This "security by design" approach treats these elements as core architectural requirements, not as features to be bolted on later. It involves systematically addressing potential vulnerabilities by embedding controls like data encryption, access management, and audit logging directly into the integration framework.

This practice recognizes that moving data between systems, especially across networks, inherently creates risks. By proactively designing for compliance with regulations like GDPR, HIPAA, and SOX, you build a resilient and trustworthy data pipeline. This means every data flow is designed to protect sensitive information, ensuring that security and privacy are maintained throughout the data lifecycle.
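One small building block of this approach is replacing sensitive fields with keyed tokens before data leaves a system, and recording that it happened. The sketch below uses HMAC-SHA256 from Python's standard library; the field list and audit format are assumptions, and keyed hashing is a form of pseudonymization rather than encryption, so it complements transport and storage encryption instead of replacing them.

```python
import hashlib
import hmac

# Hypothetical list of fields treated as sensitive.
SENSITIVE_FIELDS = {"email", "phone"}


def tokenize(value: str, key: bytes) -> str:
    """Return a keyed, irreversible token for a normalized value."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def protect_record(record: dict, key: bytes, audit_log: list) -> dict:
    """Copy the record with sensitive fields tokenized and log each change."""
    protected = dict(record)
    for field in sorted(SENSITIVE_FIELDS.intersection(record)):
        protected[field] = tokenize(str(record[field]), key)
        audit_log.append({"action": "tokenize", "field": field})
    return protected
```

The same input and key always produce the same token, so records can still be joined across systems without exposing the raw value. The key would normally come from a secrets manager, never from source code.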

Embedding security and compliance by design is one of the most vital data integration best practices because it fundamentally mitigates risk and builds trust. A reactive approach to security often leads to costly breaches, reputational damage, and regulatory fines. By being proactive, you prevent vulnerabilities before they can be exploited, making your data ecosystem safer and more reliable.

Leading companies demonstrate the power of this approach. For example, Salesforce’s multi-tenant security architecture ensures strict data isolation and privacy for each customer during integration. Similarly, HSBC uses field-level encryption and tokenization within its data integration workflows to comply with stringent banking regulations across more than 60 countries, safeguarding sensitive financial data.

By building security into the foundation of your data pipelines, you not only protect your assets but also enhance data utility for analytics and decision-making. To see how these principles apply in modern marketing analytics, discover the benefits of a secure, compliant approach to server-side tracking.

Adopting automation and applying DevOps principles to data management, a practice known as DataOps, fundamentally changes how integration pipelines are built, tested, and deployed. This approach treats data pipelines as software, using continuous integration/continuous deployment (CI/CD) to automate the entire lifecycle. It moves data integration from a manual, error-prone process to a streamlined, reliable, and agile system.

By implementing DataOps, organizations use version control for integration logic, automated testing to catch errors early, and infrastructure as code to ensure environmental consistency. This methodology minimizes human error, improves collaboration between data engineers and analysts, and drastically accelerates the delivery of high-quality, trusted data to business users.
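Treating pipelines as software means transformation logic ships with tests that a CI job can run on every change. Here is a minimal sketch using Python's built-in `unittest`; the function name, field names, and the default currency are assumptions for the example.

```python
import unittest


def to_conversion_event(row: dict) -> dict:
    """Transformation under test: map a raw CRM row to a conversion event."""
    return {
        "event_name": "purchase",
        "email": row["email"].strip().lower(),
        "value": round(float(row["amount"]), 2),
        "currency": row.get("currency", "USD").upper(),  # assumed default
    }


class ToConversionEventTest(unittest.TestCase):
    def test_normalizes_fields(self):
        event = to_conversion_event(
            {"email": " Ana@Example.com", "amount": "19.5", "currency": "eur"}
        )
        self.assertEqual(
            event,
            {"event_name": "purchase", "email": "ana@example.com", "value": 19.5, "currency": "EUR"},
        )

    def test_missing_email_is_rejected(self):
        # A row without an email should fail loudly instead of loading bad data.
        with self.assertRaises(KeyError):
            to_conversion_event({"amount": "5"})
```

Running `python -m unittest` in a CI step would then block a deployment whenever a change breaks the expected output, which is where version control and automated testing meet.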

Leveraging DataOps is a critical data integration best practice because it introduces engineering discipline into data analytics, directly addressing speed, quality, and reliability. It enables organizations to respond rapidly to changing business needs without sacrificing the integrity of their data pipelines, a common trade-off in traditional data management.

The results are transformative. ING Bank, for example, implemented DataOps practices and cut its data pipeline development cycle from six weeks down to just two, while simultaneously reducing production issues by 70%. Similarly, Spotify’s data platform team uses automated DataOps pipelines to empower over 200 data engineers, reducing deployment times from weeks to mere hours and fostering rapid innovation.

By automating these processes, you can significantly enhance efficiency and focus on higher-value activities. To understand how automation can impact other areas, explore these insights on how automation can streamline your marketing efforts.

Navigating the complexities of modern marketing and analytics requires more than just connecting a few applications. It demands a strategic, disciplined approach to data integration. Throughout this guide, we've explored the foundational pillars that separate fragile, error-prone data pipelines from the resilient, high-performing systems that drive business growth. Moving from siloed data to a unified, trustworthy ecosystem is not a single project with a finish line; it is an ongoing commitment to excellence and a core component of a data-driven culture.

The journey begins with a solid foundation. As we discussed, establishing a clear data integration strategy and governance framework is non-negotiable. This blueprint dictates how data flows, who owns it, and how it's used, preventing the disorganized "data swamp" that plagues so many organizations. Paired with an incremental and modular integration architecture, you gain the agility to adapt and scale without having to rebuild your entire system every time a new tool is introduced or a business requirement changes.

True mastery of these concepts hinges on recognizing that technology is only part of the equation. The most crucial data integration best practices revolve around process and quality.

Ultimately, these practices work in concert to build a system that is secure, compliant, and efficient. By embedding security and privacy by design, you protect your most valuable asset, your customer data, and maintain brand trust. Adopting real-time or event-driven integration where it matters most, such as for personalization or fraud detection, ensures your business can react to opportunities and threats as they happen, not hours or days later.

The overarching goal is to elevate your data infrastructure from a mere operational necessity to a strategic advantage. When your data is clean, timely, and accessible, you empower your teams to move beyond guesswork and make confident, data-backed decisions that optimize ad spend, improve customer experiences, and accelerate growth. This transformation from data chaos to clarity is the ultimate ROI of implementing robust data integration best practices.

Ready to implement these best practices without the heavy lifting? Cometly is a purpose-built attribution and marketing data integration platform that handles the complexity for you, with over 100 zero-code integrations. Focus on your strategy and let us ensure your data is accurate, reliable, and ready to fuel your growth. Explore how Cometly can unify your marketing data and unlock its true potential today.

