A solid conversion optimization strategy isn't about guesswork. It's a methodical process for getting a higher percentage of your website visitors to take a specific action, whether that's making a purchase or booking a demo. It’s all about using data-driven analysis, smart testing, and real user behavior insights to systematically improve the customer experience and, of course, boost your bottom line.

So many businesses get stuck in the "more traffic" trap. They pour money into ads, thinking a flood of new visitors will magically lead to more sales. While traffic is obviously a prerequisite, the real key to sustainable growth isn't just getting more people to your site—it's about what you do with them once they arrive.

This is where a true conversion optimization strategy comes in.

It’s not about randomly testing button colors or making changes based on a gut feeling. A real strategy is a disciplined, repeatable framework for understanding why users behave the way they do and methodically removing any friction that gets in their way. This approach makes sure every change you make is purposeful and, more importantly, measurable.

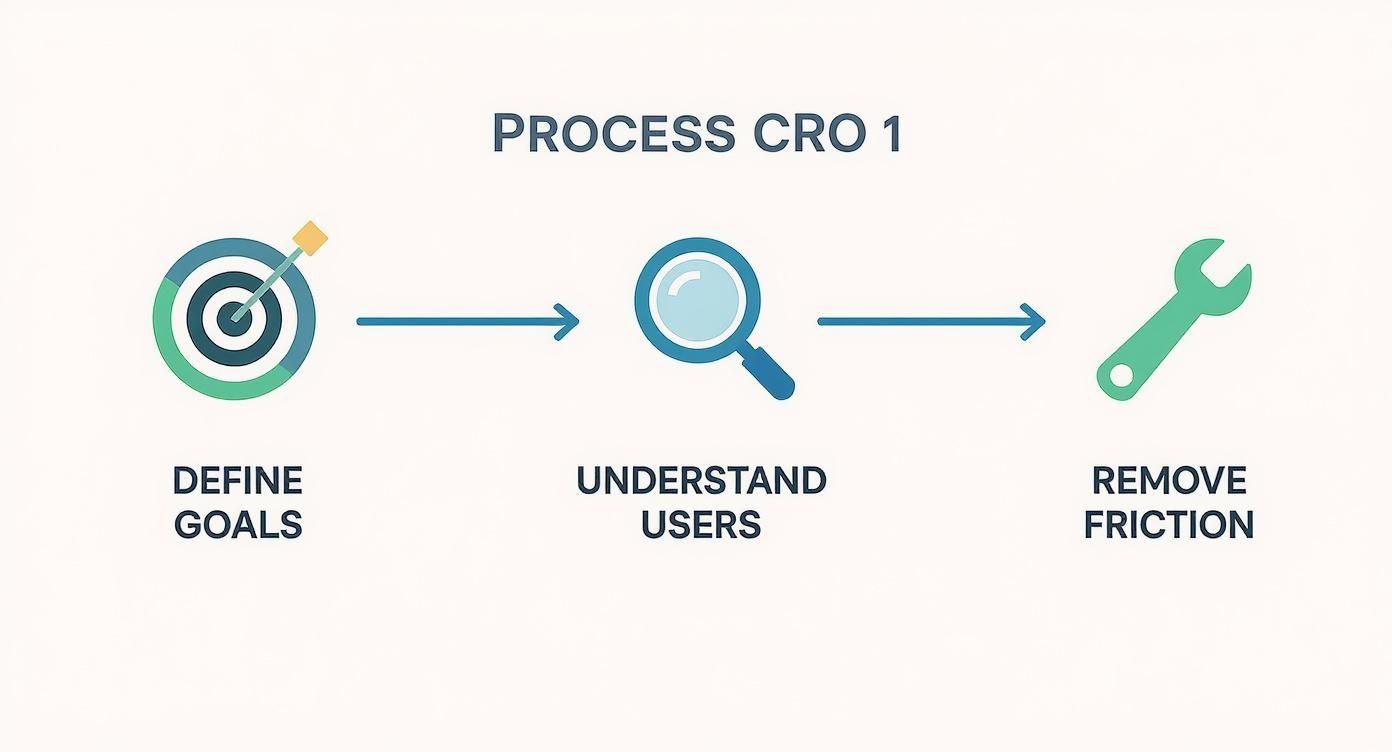

This visual breaks down the core CRO process: it all starts with defining clear goals, then moves to deeply understanding your users, and finally, removing the obstacles that stop them from converting.

As you can see, each step builds on the last, creating a continuous loop of improvement that lifts the entire customer journey.

The foundation of any good plan is setting clear, measurable goals. What action actually counts as a "win" for your business? Vague targets like "increase sales" just won't cut it—they aren't actionable. You need specific goals that tie directly back to your bigger business objectives.

Here are a few examples of what solid goals look like:

Each of these gives you a clear benchmark for success and keeps your optimization efforts focused where they'll make the biggest difference. If you're running an e-commerce store, this ultimate Shopify conversion rate optimization guide is a fantastic resource for diving deeper.

It’s so important to focus on a holistic strategy rather than just isolated tactics. Sure, a single A/B test might give you a small lift, but a well-designed strategy creates compounding returns over time. It requires a complete mindset shift—from simply buying traffic to optimizing the entire path a user takes from their first click to their final purchase. To learn more about this, check out our complete guide to customer journey optimization.

By adopting a strategic framework, you begin to build a repeatable system that not only boosts conversion rates but also elevates the overall customer experience, turning curious browsers into loyal advocates.

Before you can start plugging the leaks in your conversion funnel, you have to find them first. This isn't about guesswork; it's a systematic audit to uncover exactly where you’re losing potential customers. A solid audit is the bedrock of any successful conversion optimization strategy.

Your goal here is to shift from a vague feeling that "conversions could be better" to holding a concrete, prioritized list of problem areas. Are people abandoning their carts right before paying? Is a key landing page sending them running? The answers are sitting right there in your data.

The first part of your audit is all about the numbers. This is where you jump into your analytics tools to get a clear, objective look at what users are actually doing. You're hunting for significant drop-off points that scream "problem here!"

Start by mapping out the key stages of your funnel. For an e-commerce store, it might look something like this:

With this map in hand, you can use your analytics to see the percentage of users who successfully move from one stage to the next. Our guide on conversion funnel analytics offers a deeper dive into setting this up. A steep drop-off between "Initiate Checkout" and "Complete Purchase," for example, is a massive clue telling you to focus all your energy on the checkout process itself.
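The stage-to-stage math can be sketched in a few lines of Python. The stage names and visitor counts below are hypothetical placeholders, not benchmarks. In practice you would export these numbers from your analytics tool. The sketch divides each stage's count by the previous stage's count and flags the transition with the lowest rate.

```python
# Hypothetical funnel counts for illustration only; replace them with
# numbers exported from your own analytics tool.
FUNNEL = [
    ("Visit", 10_000),
    ("View Product", 5_000),
    ("Add to Cart", 2_000),
    ("Initiate Checkout", 1_200),
    ("Complete Purchase", 240),
]


def stage_conversion_rates(funnel):
    """Return (from_stage, to_stage, rate) for each consecutive pair of stages."""
    rates = []
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        # Guard against an empty upstream stage to avoid dividing by zero.
        rate = count / prev_count if prev_count else 0.0
        rates.append((prev_name, name, rate))
    return rates


def biggest_leak(funnel):
    """Return the transition with the lowest stage-to-stage conversion rate."""
    return min(stage_conversion_rates(funnel), key=lambda step: step[2])


if __name__ == "__main__":
    for prev_name, name, rate in stage_conversion_rates(FUNNEL):
        print(f"{prev_name} -> {name}: {rate:.0%}")
    print("Biggest leak:", biggest_leak(FUNNEL))
```

With these made-up numbers, the Initiate Checkout to Complete Purchase step converts at 20%, the lowest in the funnel. That is the kind of signal that tells you where to focus first.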

Your analytics platform is your treasure map. It doesn’t just show you where users are going; it highlights the exact spots where they’re getting lost, giving you a clear starting point for your investigation.

Numbers tell you what is happening, but they rarely explain why. This is where qualitative tools come into play, adding that crucial human context to your audit. These tools help you understand the real experience behind the data points.

Combining these two types of data gives you a powerful, complete picture. For instance, analytics might show a high bounce rate on a landing page. A session recording could then reveal that the page loads so slowly on mobile that users are bailing before it even renders. As you audit, you'll likely spot some duds; learning how to optimize landing pages for conversions can turn these weak links into your best assets.

It's also incredibly helpful to know how your conversion rates stack up against industry averages. This gives you context and helps set realistic goals. Performance can vary wildly by industry and traffic source, which is why a one-size-fits-all strategy just doesn't work.

A comprehensive study by Blogging Wizard found that while the average conversion rate across all industries hovers around 2.9%, this number changes dramatically depending on the channel.

To give you a clearer picture, the table below breaks down average conversion rates by common traffic source, helping you benchmark your own performance and identify high-potential channels for optimization.

This data shows that a "good" conversion rate isn't some universal number; it’s completely relative to your specific market and how you’re bringing customers in the door.

By the time you finish your audit, you should have a clear, prioritized list of friction points. You’ll know which pages are underperforming, which forms are causing headaches, and where your funnel is leaking the most revenue. This document becomes your roadmap, guiding every single hypothesis you'll test next.

Your audit has dug up the problems; now it's time to brainstorm the solutions. This is where a data-backed idea becomes a testable hypothesis, the very thing that separates professional CRO from amateur guesswork.

A strong hypothesis is the scientific core of your conversion optimization strategy. It ensures every test is purposeful. Without one, you're just throwing changes at the wall to see what sticks, which is a recipe for wasted time and inconclusive results. A well-crafted hypothesis, on the other hand, turns a vague hunch into a clear, measurable experiment with a specific, predicted outcome.

The best way I’ve found to structure ideas is with the "If-Then-Because" framework. It's a simple format that forces you to connect a proposed change directly to an expected outcome and—most importantly—justify it with a reason rooted in your audit data.

It looks like this: If we change [X], then [Y] will happen, because [Z].

Let's break that down:

Instead of a generic goal like "improve the CTA button," this framework forces you to be specific and strategic.

A strong hypothesis is a statement of predicted cause and effect. It clarifies what you're testing, what you expect to happen, and why. This turns every experiment into a learning opportunity, regardless of whether you win or lose.

Let’s see how this works in a real-world scenario. Imagine your funnel audit revealed a high bounce rate on a landing page. Session recordings show users hovering over the main call-to-action button, which reads "Learn More," but not clicking.

Here’s how you could build a hypothesis around that insight:

See the difference? The second version is powerful because it's specific, measurable, and directly tackles the user friction (uncertainty) you identified. It also clearly defines the success metric for the test, which becomes critical once you start analyzing results and checking whether they are statistically significant.
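If you keep your hypothesis backlog in a script or spreadsheet export, it can help to enforce the If-Then-Because structure so no idea gets logged without its rationale. The Python sketch below is one hypothetical way to do that. The `Hypothesis` class, its field names, and the example values are illustrative only and are not part of any tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """An If-Then-Because hypothesis. Field names are illustrative, not a standard schema."""

    change: str   # [X] what we will change
    outcome: str  # [Y] the measurable result we expect
    because: str  # [Z] the audit evidence that justifies the change

    def __post_init__(self):
        # Reject incomplete hypotheses: every part of the framework must be filled in.
        for part in ("change", "outcome", "because"):
            if not getattr(self, part).strip():
                raise ValueError(f"Hypothesis is missing its '{part}' part")

    def statement(self) -> str:
        """Render the hypothesis in If-Then-Because form."""
        return f"If we {self.change}, then {self.outcome}, because {self.because}."


if __name__ == "__main__":
    # Illustrative example values, not real audit findings.
    h = Hypothesis(
        change="shorten the checkout form",
        outcome="checkout completion rate will increase",
        because="session recordings show users abandoning at the form",
    )
    print(h.statement())
```

Making the "because" field mandatory is the point of the design: an idea that can't cite audit evidence isn't ready to test yet.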

Once you have a list of strong hypotheses, you’ll face a new challenge: which one to test first? You can't test everything at once, so a simple prioritization model is key to focusing your efforts where they'll deliver the biggest return.

A popular and effective framework for this is PIE, which stands for Potential, Importance, and Ease.

Score each hypothesis from 1 to 10 on each of these three criteria, then average the three scores into a single PIE score so you can quickly rank your ideas. The tests with the highest PIE scores should jump to the top of your roadmap. This structured approach keeps your CRO program efficient and laser-focused on results.
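PIE scoring usually lives in a spreadsheet, but the logic is simple enough to sketch in Python. The ideas and scores below are hypothetical. The short descriptions of each criterion in the comments are the commonly used interpretations rather than a formal definition, and combining them with a simple average is the usual convention, stated here as an assumption.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """A test idea scored on the three PIE criteria (each 1 to 10)."""

    name: str
    potential: int   # how much room for improvement the page has
    importance: int  # how valuable the traffic to this page is
    ease: int        # how easy the test is to build and launch

    def pie_score(self) -> float:
        scores = (self.potential, self.importance, self.ease)
        if any(not 1 <= s <= 10 for s in scores):
            raise ValueError(f"{self.name}: PIE criteria must be scored from 1 to 10")
        # Assumption: combine the criteria with a simple average.
        return sum(scores) / len(scores)


def rank_by_pie(ideas):
    """Return ideas sorted with the highest PIE score first."""
    return sorted(ideas, key=lambda idea: idea.pie_score(), reverse=True)


# Hypothetical backlog with made-up scores.
BACKLOG = [
    Idea("Redesign homepage hero", potential=6, importance=5, ease=2),
    Idea("Rewrite 'Learn More' CTA", potential=8, importance=9, ease=9),
    Idea("Simplify checkout form", potential=9, importance=10, ease=4),
]

if __name__ == "__main__":
    for idea in rank_by_pie(BACKLOG):
        print(f"{idea.pie_score():.1f}  {idea.name}")
```

Here, the low Ease score drops the checkout idea below the CTA rewrite even though it has higher Potential and Importance. Surfacing that kind of trade-off is exactly what PIE is for.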

You’ve done the hard work of digging through the data, auditing your funnel, and building a solid list of hypotheses. Now for the fun part: putting those ideas to the test and seeing how they hold up against real user behavior.

This is where the rubber meets the road in any serious conversion optimization strategy. Controlled experiments are your engine for growth, allowing you to move from educated guesses to data-backed wins.

But this isn’t about just throwing a test live and crossing your fingers. A disciplined approach is what separates the pros from the amateurs, ensuring your results are clean, statistically significant, and actually reflect what your customers want.

First things first, you need the right platform to run your experiments. The market is full of options, from free tools that are perfect for getting started to powerful platforms built for enterprise-level testing.

The right tool really depends on your budget, traffic volume, and technical chops. The most important thing is to pick one that lets you easily set up variations, split your traffic cleanly, and accurately track the conversion goals you care about.
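Your testing tool handles the traffic split, but it helps to know what splitting "cleanly" means. Each visitor should be assigned at random, and a returning visitor should keep seeing the same version. A common way to achieve both is to hash a stable visitor ID. The Python sketch below illustrates the idea. The function name, the experiment label, and the choice of SHA-256 are illustrative assumptions, not a description of how any particular tool works internally.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variation")) -> str:
    """Deterministically map a user to one variant of one experiment."""
    # Salting with the experiment name gives each experiment an independent split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as a large integer and map it onto the variant list.
    return variants[int(digest, 16) % len(variants)]


if __name__ == "__main__":
    split = Counter(assign_variant(f"user-{i}", "cta-copy-test") for i in range(10_000))
    print(split)
```

Because the assignment depends only on the visitor ID and experiment name, the same person always lands in the same bucket, and across many visitors the split comes out close to 50/50.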

One of the biggest mistakes I see people make in CRO is calling a test too early. You might see one variation jump ahead after a day or two and get excited, but that's often just random noise. Don't fall for it.

For a decision to be reliable, your test must reach statistical significance.

This term means that a difference as large as the one you're seeing would be unlikely to show up if the two versions actually performed the same. Most A/B testing tools use a 95% confidence level, which means you accept at most a 5% chance of declaring a winner when there is no real difference. Waiting for your test to hit this threshold is non-negotiable. If you don't, you could end up rolling out a "winner" that has zero impact, or one that even hurts your conversions.
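Your testing tool runs this math for you, but seeing it once makes the 95% threshold less mysterious. The Python sketch below is a standard two-proportion z-test, and the visitor and conversion counts are hypothetical. Many tools use other methods (Bayesian or sequential statistics, for example), so treat this as an illustration rather than a replica of any tool's calculation. It also assumes you fixed the sample size before the test started. Checking results every day and stopping at the first "significant" reading inflates false positives.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Two-sided z-test for a difference in conversion rates (A = control, B = variation).

    Returns (z, p_value). Uses the normal approximation, which is reasonable
    when each variation has a healthy number of conversions.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def is_significant(p_value, confidence=0.95):
    """A 95% confidence level corresponds to requiring p < 0.05."""
    return p_value < 1 - confidence


if __name__ == "__main__":
    # Hypothetical results: 2.0% vs 2.6% conversion on 10,000 visitors each.
    z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}, significant: {is_significant(p)}")
```

With these made-up numbers, the z-score is about 2.8 and the p-value is well under 0.05, so the lift clears a 95% confidence bar. A result of 200 versus 210 conversions on the same traffic would not.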

A few common slip-ups can completely invalidate your test results, wasting all the effort you've put in. Keeping your experiments clean is crucial for maintaining the integrity of your whole optimization program.

Here are a few critical mistakes to steer clear of:

A failed test isn't a waste of time; it's a learning opportunity. When a hypothesis is proven wrong, the data still tells you something incredibly valuable about what motivates (or doesn't motivate) your customers. That insight fuels your next, smarter hypothesis.

The real magic happens when you dig into the analysis. A test result isn't just a binary "win" or "loss"—it's a goldmine of customer insight.

So your new, emotional, benefit-driven headline lost to the old, boring one. What does that tell you? Maybe your audience is more technical and responds better to specs than to emotional appeals. That's a huge insight.

This is where integrating your testing platform with a tool like Cometly becomes a game-changer. By connecting your experiment data with deep attribution, you can see not just which version won, but who it won with.

Maybe the new design crushed it with mobile users from paid search but flopped with desktop users from organic. These are the kinds of granular insights that turn a good CRO program into a great one. You can learn more about this in our guide to conversion analytics.
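Whatever tool you use, the underlying analysis is a breakdown of conversion rate by variant and segment. The Python sketch below shows that breakdown on made-up visitor records. The segment labels and counts are hypothetical, and in practice the records would come from your testing or attribution tool's export.

```python
from collections import defaultdict


def rates_by_segment(records):
    """Conversion rate per (variant, segment) from per-visitor records.

    Each record is a (variant, segment, converted) tuple.
    """
    totals = defaultdict(lambda: [0, 0])  # (variant, segment) -> [conversions, visitors]
    for variant, segment, converted in records:
        bucket = totals[(variant, segment)]
        bucket[0] += int(converted)
        bucket[1] += 1
    return {key: conversions / visitors for key, (conversions, visitors) in totals.items()}


def make_records(variant, segment, visitors, conversions):
    """Build hypothetical visitor records: the first `conversions` visitors converted."""
    return [(variant, segment, i < conversions) for i in range(visitors)]


# Hypothetical data: the variation wins on mobile paid search but loses on desktop organic.
RECORDS = (
    make_records("control", "mobile / paid search", 1_000, 20)
    + make_records("variation", "mobile / paid search", 1_000, 35)
    + make_records("control", "desktop / organic", 1_000, 40)
    + make_records("variation", "desktop / organic", 1_000, 30)
)

if __name__ == "__main__":
    for (variant, segment), rate in sorted(rates_by_segment(RECORDS).items()):
        print(f"{segment:<22} {variant:<10} {rate:.1%}")
```

Blended together, these two versions look nearly identical (3.0% versus 3.25%), which hides the fact that they move in opposite directions by segment. Each segment has less traffic than the whole test, so check segment-level differences for significance before acting on them.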

Small, focused changes can have a massive ripple effect. The table below shows just how much impact specific optimizations can have on overall conversion rates.

Data sourced from a Market.us study on CRO statistics.

As the data shows, a methodical testing process focused on removing friction and improving user experience directly translates to significant gains.

Ultimately, every experiment—win or lose—sharpens your understanding of the customer. That knowledge becomes the bedrock for your next round of hypotheses, creating a powerful, continuous loop of improvement that drives real growth.

A single successful A/B test feels great, but it’s just one battle. Winning the war means turning those individual victories into a powerful, self-sustaining growth engine. The real edge comes from building a system where every experiment—win or lose—makes your entire marketing operation smarter.

This is all about creating a continuous feedback loop. You run a test, learn something new about your customers, and then apply that knowledge everywhere you can. It’s how you go from making small tweaks on a single page to lifting the performance of your entire funnel.

First things first: stop treating test results like disposable data. Every single experiment you run generates a valuable nugget of insight into customer behavior, and that knowledge needs to be captured and organized. A centralized knowledge base is non-negotiable here.

This doesn't have to be complicated. It could be a shared spreadsheet or a dedicated project management tool like Asana or Monday.com. The key is to create a single source of truth that documents every test you've run.

For each experiment, your log should include:

This document becomes your optimization program's memory. It stops you from repeating failed tests and ensures that institutional knowledge doesn't walk out the door when a team member leaves.

A well-maintained knowledge base transforms your CRO efforts from a series of disconnected tactics into a strategic library of customer insights. It ensures every dollar you spend on testing delivers long-term value, even when an individual test fails.

Once you’ve validated a winning concept, the real scaling begins. A successful test on one landing page is often a signal of a broader customer preference, and applying that learning across your funnel is where your optimization efforts start to compound.

Did changing your CTA button from "Submit" to "Get Your Free Quote" boost conversions by 25%? Awesome. But don’t just celebrate the win on that one page. That learning—that your users prefer specific, value-driven language—is a powerful insight.

Now, roll it out everywhere:

By applying this single validated insight across multiple touchpoints, you amplify the impact of your original test. What started as a small lift on one page can quickly become a significant boost to your overall conversion rate.

Ultimately, the most successful companies don't just do CRO; they live and breathe it. They build a culture of testing where optimization isn't a side project owned by one person but a core function baked into every relevant department.

Fostering this culture means encouraging curiosity and challenging assumptions with data. When the product team wants to launch a new feature, the first question should be, "How can we test its impact on user engagement?" When the marketing team designs a new campaign, they should already be planning the A/B tests for the landing pages.

This cultural shift requires buy-in from leadership and empowers teams to experiment without the fear of failure. It frames every marketing activity as a chance to learn and get better.

It’s also crucial to understand where you stand in your industry to set realistic goals. Benchmarks show that CRO success varies wildly between sectors. Data from Lead Forensics reveals that B2B conversion rates can range from just 1.1% for SaaS companies to a high of 7.4% in legal services. These numbers often blend major conversions like sales with smaller ones like content downloads, which is why a sector-specific approach is so important. You can dig into more of these B2B conversion rate statistics on LeadForensics.com to see how you stack up.

By documenting your learnings, applying them broadly, and embedding experimentation into your company's DNA, you transform CRO from a series of one-off projects into a powerful, ever-improving system for growth.

Even with a great framework in hand, getting into the weeds of a conversion optimization strategy always brings up a few questions. Let's get them out of the way now so you can keep your program moving forward with confidence.

These are the practical hurdles that pop up for just about everyone, from tiny startups running on fumes to huge companies trying to build a real testing culture. Nail these answers, and you’ll build momentum much faster.

This is easily the most common question I hear, and the right answer is almost never "one week." The perfect test duration has nothing to do with a fixed calendar date; it's all about reaching statistical significance. You need to run a test long enough to be confident your results aren't just a fluke.

A few things will influence how long that takes:

As a rule of thumb, shoot for at least 100-200 conversions per variation. It's also a good idea to run the test for at least one full business cycle—usually one to two weeks—to iron out any weird daily spikes or dips in user behavior.
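To see why that rule of thumb is only a starting point, you can estimate the required sample before launching. The Python sketch below uses the standard normal-approximation formula for comparing two conversion rates at 95% confidence and 80% power. The baseline rate, expected lift, and daily traffic are hypothetical, and the 80% power default is a common convention rather than something this guide prescribes.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Visitors needed per variation to detect baseline_rate -> expected_rate.

    Standard normal-approximation formula for comparing two proportions
    with a two-sided test at significance level alpha.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def duration_in_days(visitors_per_variation, daily_visitors, variations=2):
    """Days needed at the given total daily traffic, rounded up to whole weeks."""
    days = ceil(visitors_per_variation * variations / daily_visitors)
    # Round up to full weeks so every day of the week is covered equally.
    return ceil(days / 7) * 7


if __name__ == "__main__":
    # Hypothetical inputs: 2.0% baseline, hoping to detect a lift to 2.5%.
    n = sample_size_per_variation(0.020, 0.025)
    print(f"{n:,} visitors per variation, about {round(n * 0.020)} conversions in the control")
    print(f"{duration_in_days(n, daily_visitors=2_000)} days at 2,000 visitors/day")
```

With these hypothetical inputs, the sketch asks for roughly 13,800 visitors per variation (about 276 conversions in the control) and two weeks of traffic at 2,000 visitors a day. Smaller expected lifts push these numbers up quickly, which is why 100-200 conversions is a floor, not a guarantee.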

Look, not every test is going to give you a clear winner. Sometimes, the results come back totally flat, with no statistically significant difference between your original and the new version. This isn't a failure—it's a learning opportunity.

An inconclusive result tells you one thing very clearly: the element you changed didn't really matter to your users. That's incredibly valuable information. It stops you from pushing a change live based on a gut feeling and helps you zero in on what your audience actually cares about.

When a test comes back inconclusive, just document what you learned and move on to the next hypothesis on your list.

Don't ever think of an inconclusive test as a waste of time. It's a data point showing that your change didn't move the metric you cared about, which saves you from shipping a pointless change and frees you up to focus on ideas with real potential.

You really don't need a massive, expensive tech stack to get an effective conversion optimization strategy off the ground. For most small and medium-sized businesses, just a few core tools will get you everywhere you need to go.

Here’s your essential CRO starter pack:

Start with these three, and you'll have everything you need to audit your site, build solid hypotheses, and validate them with real data. You can always explore more advanced platforms as your program grows. For a deeper look at effective tactics, our guide covers many essential conversion rate optimization best practices to get you going.

Getting the green light from leadership can be a huge challenge, especially in companies that aren't used to a data-first approach. The secret is to frame your entire conversion optimization strategy in the one language every leader understands: revenue and ROI.

Stop talking about "button colors" or "headline tweaks." Start talking about financial impact.

Use the data from your audit to put a number on the problem. For instance, instead of saying the checkout is confusing, say this: "Our checkout page has a 40% abandonment rate, which we estimate is costing us $50,000 in lost revenue every month. My hypothesis is that by simplifying the form, we can recover 10% of that."

This approach ties your testing efforts directly to the bottom line. Suddenly, CRO isn't just a "marketing thing"—it's a clear business priority.
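The arithmetic behind a pitch like this fits in a few lines. The Python sketch below assumes you estimate lost revenue as checkout starts times abandonment rate times average order value. The inputs are hypothetical values chosen to reproduce the $50,000 example, not data from a real store. Not every abandoned checkout would have converted, so treat the result as an upper-bound estimate.

```python
def monthly_lost_revenue(checkout_starts, abandonment_rate, average_order_value):
    """Revenue walking away at checkout each month (an upper-bound estimate)."""
    return checkout_starts * abandonment_rate * average_order_value


def recovered_revenue(lost_revenue, recovery_share):
    """Revenue recovered if a test wins back `recovery_share` of the lost amount."""
    return lost_revenue * recovery_share


if __name__ == "__main__":
    # Hypothetical inputs chosen to reproduce the $50,000 example:
    # 2,500 checkout starts a month, 40% abandonment, $50 average order value.
    lost = monthly_lost_revenue(2_500, 0.40, 50)
    monthly_gain = recovered_revenue(lost, 0.10)
    print(f"Lost per month: ${lost:,.0f}")
    print(f"Recovered per month: ${monthly_gain:,.0f}, per year: ${monthly_gain * 12:,.0f}")
```

Framed this way, a 10% recovery is worth about $5,000 a month, or $60,000 a year. That number is much easier for leadership to weigh against the cost of running the test.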

Ready to connect your optimization efforts to real revenue? Cometly provides the clear attribution data you need to prove what's working and scale your wins with confidence. See how our platform can supercharge your conversion optimization strategy.
