Site conversion optimization is all about turning more of your website visitors into customers. It's not about chasing more traffic; it’s about getting more value from the traffic you already have. The goal is to figure out what makes your users tick, remove any friction points, and make it incredibly easy for them to say "yes" to your offer.

Before you start changing headlines or button colors, you need data you can actually trust. Guesswork is the absolute enemy of effective conversion optimization. Making changes based on a hunch or incomplete analytics is like trying to navigate a maze blindfolded—you’ll waste a ton of time and probably end up right back where you started.

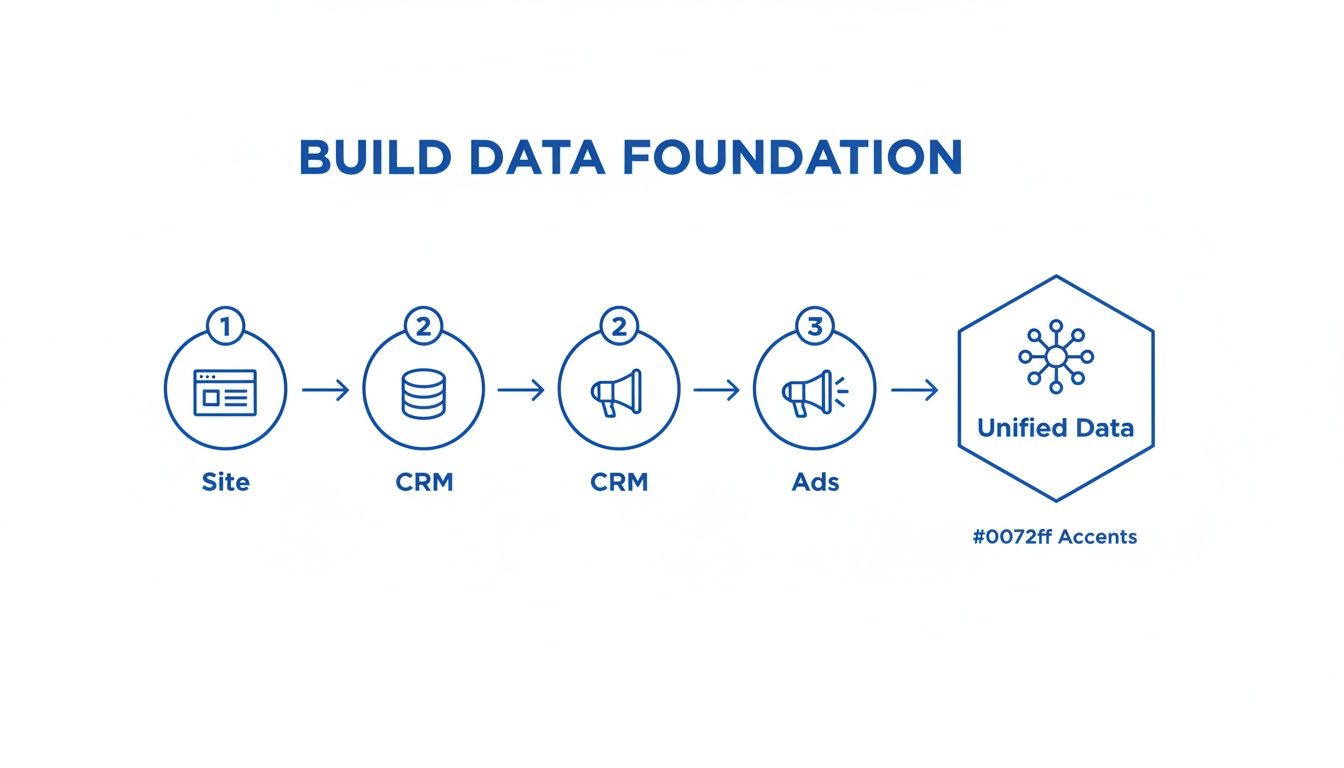

The first step is always to build an analytics framework that gives you the full picture of the customer journey, from the first ad they saw to the moment they purchased. This means digging deeper than surface-level metrics like page views and bounce rates. Real insight comes from stitching together data from all your different platforms into one unified source of truth.

To really understand what’s happening, you have to connect the dots between what users do on your site, what their history looks like in your CRM, and how they’re interacting with your ad campaigns. When these systems are siloed, you’re only seeing fragments of the story.

For example, your ad platform might happily report a conversion, but it has no idea that the same customer requested a refund a week later. That critical piece of information lives in your CRM, and without connecting it, you might keep pouring money into a campaign that looks profitable but is actually driving low-quality customers.

This is where you bring everything together into a central hub.

By consolidating this information, you eliminate the data gaps and get a clear, holistic view of performance. This allows you to accurately map the customer’s entire path and finally understand what truly drives revenue.
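To make the refund example concrete, here is a minimal sketch of that kind of consolidation. It assumes you can export ad-platform conversions and CRM refunds and join them on a shared order ID. The field names, campaign names, and amounts are hypothetical and do not follow any specific platform's schema.

```python
from collections import defaultdict

# Hypothetical exports: conversions as the ad platform reports them, and
# refunds recorded in the CRM. Field names are illustrative, not a real schema.
ad_conversions = [
    {"order_id": "A1", "campaign": "spring_sale", "revenue": 120.0},
    {"order_id": "A2", "campaign": "spring_sale", "revenue": 80.0},
    {"order_id": "A3", "campaign": "retargeting", "revenue": 60.0},
]
crm_refunds = {"A1": 120.0}  # order_id -> refunded amount


def net_revenue_by_campaign(conversions, refunds):
    """Return {campaign: (reported_revenue, net_revenue)} after subtracting CRM refunds."""
    reported = defaultdict(float)
    net = defaultdict(float)
    for conv in conversions:
        reported[conv["campaign"]] += conv["revenue"]
        # Subtract whatever the CRM says was refunded for this order (0 if nothing was).
        net[conv["campaign"]] += conv["revenue"] - refunds.get(conv["order_id"], 0.0)
    return {campaign: (reported[campaign], net[campaign]) for campaign in reported}


for campaign, (reported, net) in net_revenue_by_campaign(ad_conversions, crm_refunds).items():
    print(f"{campaign}: reported {reported:.2f}, net {net:.2f}")
```

In this toy data the ad platform credits `spring_sale` with 200.00 in revenue, but once the CRM refund is applied only 80.00 of it is real, which is exactly the gap a siloed view hides.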

One of the biggest headaches in digital marketing today is unreliable browser tracking. Ad blockers, privacy changes like Apple's iOS 14 updates, and cookie restrictions mean a significant share of your conversion data never reaches your analytics tools. The result is missing conversions and misattributed sales, which skews your view of what's actually working.

Server-side tracking addresses this. Instead of relying on the user's browser (client-side) to send data, this method sends conversion events directly from your server to your analytics and ad platforms. Because those events don't depend on browser scripts or third-party cookies, they avoid most of the ad-blocker and cookie problems that plague browser tracking, so you capture a far more complete picture.
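As a rough illustration of what "sending events from your server" involves, the sketch below builds a conversion payload on the server and POSTs it as JSON. The payload fields, the `event_id` deduplication key, and the collector URL are all hypothetical; each ad platform defines its own schema, endpoint, and authentication. Hashing a normalized email with SHA-256 mirrors a common requirement for sending user identifiers, but check your platform's documentation before relying on it.

```python
import hashlib
import json
import time
import urllib.request


def hash_identifier(value: str) -> str:
    """Normalize (trim and lowercase) a user identifier such as an email, then SHA-256 hash it."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()


def build_conversion_event(order_id: str, email: str, value: float, currency: str = "USD") -> dict:
    """Build a generic server-side conversion payload (field names are illustrative)."""
    return {
        "event_name": "purchase",
        "event_id": order_id,            # stable ID so a platform can deduplicate against a browser pixel
        "event_time": int(time.time()),  # Unix timestamp in seconds
        "user": {"email_sha256": hash_identifier(email)},
        "value": value,
        "currency": currency,
    }


def send_event(payload: dict, endpoint: str) -> int:
    """POST the payload as JSON to a collection endpoint and return the HTTP status code."""
    request = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    event = build_conversion_event("ORDER-1001", "  Jane@Example.com ", 49.99)
    print(json.dumps(event, indent=2))
    # send_event(event, "https://collector.example.com/events")  # hypothetical endpoint
```

The key design point is that the event originates from your own backend (for example, right after an order is confirmed), so it doesn't matter whether the visitor's browser blocked a tracking script.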

Platforms like Cometly are built for this, offering a zero-code setup to make sure your tracking is reliable. This is the kind of data clarity that lets you invest your marketing budget with confidence. For context, the global CRO software market is projected to reach USD 1.93 billion by 2026, and companies report an average ROI of 223% on their optimization efforts.

By implementing a robust tracking system, you create a foundation for a data-driven culture. It’s not just about collecting data; it's about collecting the right data to make smart, impactful optimization decisions that grow your bottom line.

Hardly anyone converts on their first visit. The typical customer journey is messy—they might see a TikTok ad, later click a Google search result, open an email a week later, and then finally type your URL directly into their browser to buy.

A last-click attribution model would give 100% of the credit to that final direct visit, completely ignoring the crucial roles the TikTok ad and Google search played in getting them there. This is a recipe for bad decisions, as you'd likely cut the budget for the very channels that introduced them to your brand in the first place.

Multi-touch attribution solves this by assigning value to each interaction along the customer’s path. It gives you a much more nuanced understanding of how your marketing channels work together to drive sales. You can finally see which campaigns are great for awareness, which ones are best for consideration, and which ones close the deal.
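To see how much the choice of model changes the picture, here is a small sketch that scores the example journey above under last-click and two common multi-touch rules: linear (equal credit) and position-based (U-shaped, 40% to the first and last touch, the rest split across the middle). The article doesn't prescribe a specific model, so treat these as illustrative; data-driven models work differently.

```python
from collections import defaultdict


def last_click(path):
    """Give 100% of the credit to the final touchpoint."""
    return {path[-1]: 1.0}


def linear(path):
    """Split the credit equally across every touchpoint."""
    credit = defaultdict(float)
    for channel in path:
        credit[channel] += 1.0 / len(path)
    return dict(credit)


def position_based(path, ends=0.4):
    """U-shaped: `ends` share to the first and last touch, remainder split across the middle."""
    if len(path) <= 2:
        return linear(path)  # with two or fewer touches, split evenly
    credit = defaultdict(float)
    credit[path[0]] += ends
    credit[path[-1]] += ends
    middle = path[1:-1]
    for channel in middle:
        credit[channel] += (1.0 - 2 * ends) / len(middle)
    return dict(credit)


journey = ["tiktok_ad", "google_search", "email", "direct"]
for model in (last_click, linear, position_based):
    print(model.__name__, model(journey))
```

Under last-click, the TikTok ad gets nothing; under the position-based rule it gets 40% of the credit, which is exactly the kind of difference that changes budget decisions.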

To properly analyze this complex journey, you need to be tracking a variety of data points.

Tracking the right metrics is the difference between guessing and knowing. The table below breaks down the essential data points you should be collecting to get a clear view of your conversion funnel and user behavior.

By focusing on these key metrics, you move beyond vanity numbers and start gathering the insights needed for meaningful optimization. Understanding how to collect and interpret this information is the core of making your data actionable and turning insights into revenue.

No matter how slick your website is, it has leaks. These are the hidden friction points—the confusing forms, the broken links, the distracting pop-ups—where potential customers give up and walk away. Real site conversion optimization isn’t about guesswork; it’s about becoming a data detective to find and plug these leaks before they quietly drain your revenue. The whole process starts by digging deeper than surface-level metrics and blending two powerful types of data.

First up is quantitative data. This is the hard evidence—the "what" and "where"—that lives inside your analytics platform. It tells you which pages have sky-high exit rates or where users are dropping off in your funnel. For an e-commerce brand, this might be a checkout page where 70% of users are abandoning their carts. For a SaaS business, it could be the pricing page that almost nobody clicks through from.
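Finding "where users are dropping off" usually comes down to comparing each funnel step with the one before it. The sketch below does that with hypothetical step counts; the step names and numbers are made up, chosen so the checkout step shows the 70% abandonment mentioned above.

```python
# Hypothetical step counts exported from an analytics tool (unique users per step).
funnel = [
    ("product_page", 10_000),
    ("add_to_cart", 3_000),
    ("checkout_started", 1_500),
    ("purchase", 450),
]


def step_drop_off(steps):
    """Return (from_step, to_step, drop_off_rate) for each consecutive pair of steps."""
    report = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        drop = 1 - count_b / count_a if count_a else 0.0
        report.append((name_a, name_b, drop))
    return report


for src, dst, drop in step_drop_off(funnel):
    print(f"{src} -> {dst}: {drop:.0%} drop-off")
```

A report like this points you to the scene of the crime; it still can't tell you why people leave at that step.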

This data is great for pointing you to the scene of the crime, but it won’t tell you why the crime happened. That’s where the other half of the story comes in.

To really understand what’s driving your users to leave, you need qualitative insights. These tools let you see your website through your customers’ eyes, revealing the human behavior and frustrations behind the cold, hard numbers. Instead of just knowing people are bailing, you get to see the struggle right before they click away.

Here are the essential qualitative tools for your detective kit:

By blending quantitative reports with qualitative observations, you move from guesswork to evidence-backed problem identification. You’re not just saying, "Our cart abandonment is high"; you're saying, "Our cart abandonment is high because the promo code field is confusing, and users think they need a code to proceed."

Once you’ve found a leak, the next step is to frame it as a clear problem statement. A solid problem statement pinpoints the specific issue and who it affects, which sets you up to create a strong, testable hypothesis down the line. It’s the bridge between diagnosis and treatment.

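For example, let's say your session recordings show mobile users fumbling to fill out your checkout form. A clear problem statement might read: "Mobile visitors are abandoning checkout because the form is hard to complete on small screens."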

This level of clarity is absolutely essential for a successful site conversion optimization strategy. It makes sure you're solving real user problems, not just chasing vanity metrics. You can learn more about turning these findings into strategy by exploring guides on revenue analytics.

You're probably going to uncover dozens of potential leaks. If you try to fix them all at once, you’ll get nowhere fast. The key is to prioritize based on potential impact and the level of effort required. For each problem you find, ask yourself two simple questions:

Focus your energy on the problems that hit the most people at the most critical stages of the funnel. This disciplined approach ensures your optimization efforts go where they can make the biggest difference to your bottom line, turning your diagnostic work into measurable growth.

So, you’ve dug through the data and pinpointed where you’re bleeding revenue. Great. Now you’re probably looking at a long list of potential fixes, and this is where a lot of teams get stuck. The next move is what separates strategic site conversion optimization from just throwing changes at the wall and hoping something sticks. It's time to turn those raw insights into structured, testable ideas.

A vague thought like "make the CTA button better" is completely useless for testing. A strong hypothesis, on the other hand, is a specific, measurable statement that lays out the proposed change, the outcome you expect, and why you expect it. This structure forces you to think critically and ensures every experiment is actually rooted in the data you just spent hours analyzing.

For example, if your heatmaps show users are totally ignoring your product page CTA, a solid hypothesis isn't just a guess. It's a calculated prediction: "By changing our product page CTA from 'Learn More' to 'Add to Cart Now,' we will increase cart additions by 10% because the new copy creates a clearer, more direct action for users who are ready to buy."
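One way to keep hypotheses consistent across a team is to record them in a fixed structure with the three parts described above: the change, the expected outcome, and the reason. This is a hypothetical sketch, not a required format; the field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    change: str           # the proposed change
    metric: str           # the metric you expect to move
    expected_lift: float  # predicted relative change, e.g. 0.10 for +10%
    rationale: str        # why you expect it, tied back to the evidence

    def statement(self) -> str:
        """Render the hypothesis as a single testable sentence."""
        return (
            f"By {self.change}, we will increase {self.metric} by "
            f"{self.expected_lift:.0%} because {self.rationale}."
        )


cta_test = Hypothesis(
    change="changing our product page CTA from 'Learn More' to 'Add to Cart Now'",
    metric="cart additions",
    expected_lift=0.10,
    rationale="the new copy creates a clearer, more direct action for users who are ready to buy",
)
print(cta_test.statement())
```

Forcing every idea through the same fields makes vague entries like "make the CTA button better" easy to spot: they have no metric, no expected lift, and no rationale to fill in.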

A truly effective hypothesis has three core parts. It’s an educated prediction based on the evidence you've already collected, and getting this right is fundamental to building an effective testing program.

Here’s a simple framework I use:

This disciplined approach transforms your diagnostic work into a series of clear, actionable experiments. Every single test becomes a lesson, whether you win or lose, giving you valuable insight into what makes your audience tick.

Once you have a backlog of strong hypotheses, the next challenge is deciding what to test first. You can’t do everything at once, so you need a system to prioritize ideas based on their real potential to move the needle.

A classic mistake is gravitating toward the easy changes, like tweaking button colors. While simple, these tests often have a minimal impact. A prioritization framework forces you to focus on changes that actually matter to your bottom line.

A simple yet incredibly effective model for this is the PIE framework. It helps you score each hypothesis against three key criteria, giving you a structured way to build your testing roadmap.

The PIE framework assesses each idea on a scale of 1 to 10 for:

By scoring each hypothesis and adding up the numbers, you can quickly see which high-impact, high-importance ideas are also feasible to execute right now. For a deeper look at identifying the most valuable user actions, check out our guide on conversion funnel analytics.
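Here is a small sketch of PIE scoring for a backlog, using the usual reading of the three criteria (Potential, Importance, Ease). The ideas and scores are made up for illustration. Summing the three scores ranks ideas the same way as averaging them, so either convention works.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    potential: int   # 1-10: how much improvement is possible on this page
    importance: int  # 1-10: how valuable the traffic to this page is
    ease: int        # 1-10: how easy the test is to build and run

    def pie_total(self) -> int:
        """Validate the 1-10 scale and return the combined PIE score."""
        for score in (self.potential, self.importance, self.ease):
            if not 1 <= score <= 10:
                raise ValueError(f"{self.name}: scores must be between 1 and 10")
        return self.potential + self.importance + self.ease


backlog = [
    Idea("Simplify mobile checkout form", potential=8, importance=9, ease=5),
    Idea("Change CTA copy on product page", potential=6, importance=7, ease=8),
    Idea("Tweak footer button color", potential=2, importance=2, ease=10),
]

for idea in sorted(backlog, key=lambda i: i.pie_total(), reverse=True):
    print(f"{idea.pie_total():>2}  {idea.name}")
```

Note how the button-color tweak scores a perfect 10 on ease but still lands at the bottom, which is the framework doing its job.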

This structured approach makes sure you invest your time and resources where they'll generate the biggest return. With a prioritized roadmap in place, you can start strategizing on how to improve ecommerce conversion rates by tackling the most promising opportunities first.

Alright, you've pinpointed the leaks in your funnel and cooked up some solid hypotheses about what might fix them. This is the moment of truth. It's time to move your ideas from a spreadsheet into the real world and run controlled experiments to get clear, data-backed answers on what actually improves performance.

The two main ways to do this are A/B testing and its more complex cousin, multivariate testing.

Most people start with A/B testing. It's straightforward: you pit the original version of a page or element (Version A) against your new idea (Version B) to see which one comes out on top. Think of testing a red "Buy Now" button against a green one to see which color gets more clicks. Simple, clean, and effective.

Then you have multivariate testing. This is where you test multiple changes at the same time to find the best-performing combination of elements. For example, you could test two different headlines, two different hero images, and two different CTAs all at once. The goal isn't just to find the best headline, but to discover the ultimate winning recipe from all possible combinations.
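The traffic cost of multivariate testing follows directly from the combinations. A full-factorial test of two headlines, two hero images, and two CTAs serves every combination as its own variation. The sketch below counts them and compares total traffic with a simple A/B test, assuming a hypothetical 5,000 visitors needed per variation.

```python
from itertools import product

headlines = ["Headline A", "Headline B"]
hero_images = ["Image A", "Image B"]
ctas = ["CTA A", "CTA B"]

# A full-factorial multivariate test serves every combination as its own variation.
variations = list(product(headlines, hero_images, ctas))
print(len(variations))  # 2 x 2 x 2 = 8


def visitors_needed(per_variation_sample: int, n_variations: int) -> int:
    """Total traffic needed if every variation must reach the same sample size."""
    return per_variation_sample * n_variations


print(visitors_needed(5_000, len(variations)))  # multivariate, 8 variations: 40000
print(visitors_needed(5_000, 2))                # simple A/B test, 2 variations: 10000
```

Each extra element you add multiplies the number of variations, which is why multivariate tests need so much more traffic than A/B tests to reach reliable results.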

So, A/B or multivariate? The right choice really hinges on your specific goals, how much traffic you're working with, and how complex your hypothesis is.

A/B tests are perfect for getting quick, decisive answers on isolated changes. Because they're simpler, they don't need a massive amount of traffic to reach statistical significance. On the other hand, multivariate tests are built for bigger projects, like a complete page redesign, but they demand a ton of traffic to deliver reliable results.

Here’s a quick comparison to help you choose the right experimental approach for your hypothesis.

For most teams just kicking off their site conversion optimization efforts, A/B testing is the way to go. It's less complicated and gives you actionable insights much faster.

Just launching a test isn't enough—several common mistakes can completely invalidate your results and send you down the wrong path. One of the biggest blunders I see is ending a test too soon. It's tempting to call it when one version pulls ahead after a few days, but that early lead is often just random noise.

A test needs to run long enough to collect a sufficient sample size for each variation, so the results are statistically significant rather than a product of chance. Rushing to a conclusion is one of the easiest ways to ship a false positive.
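Why is stopping early so risky? The simulation below runs A/A tests, where both arms share the same true conversion rate, so any "winner" is a false positive. It compares two habits: checking a two-proportion z-test after every batch of traffic and stopping at the first significant result, versus analyzing once at the planned end. The traffic volumes, conversion rate, and number of peeks are hypothetical, and the exact rates you see depend on those choices and the random seed.

```python
import math
import random


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(n_tests=500, peeks=10, visitors_per_peek=200,
                      rate=0.10, alpha=0.05, seed=42):
    """Return (false-positive rate when stopping at any significant peek,
    false-positive rate when analyzing only once at the planned end)."""
    rng = random.Random(seed)
    peeking_wins = fixed_wins = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        significant_at_some_peek = False
        p_value = 1.0
        for _ in range(peeks):
            n += visitors_per_peek
            # Both arms share the same true conversion rate.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_peek))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_peek))
            p_value = z_test_p_value(conv_a, n, conv_b, n)
            if p_value < alpha:
                significant_at_some_peek = True  # a "peeker" would call a winner here
        peeking_wins += significant_at_some_peek
        fixed_wins += p_value < alpha  # only the final, planned look counts
    return peeking_wins / n_tests, fixed_wins / n_tests


peek_rate, fixed_rate = simulate_aa_tests()
print(f"False positives when stopping at any peek: {peek_rate:.1%}")
print(f"False positives with one planned analysis: {fixed_rate:.1%}")
```

With a single planned analysis, the false-positive rate stays close to the 5% you signed up for; repeatedly peeking and stopping on the first "significant" result inflates it well beyond that, even though nothing about the page actually changed.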

Here are a few other critical mistakes to watch out for:

Making sure your test results are statistically sound is non-negotiable. You can learn more in our guide on what statistical significance is and why it's so critical for your experiments.

Once your test has run its course, it's time to dig into the results. Your first goal is to determine a clear winner. But more importantly, you need to understand why it won. Did the new headline connect better with your audience's pain points? Did that simplified form really reduce friction? These are the insights that make you smarter.

And remember, a "failed" test is never a true failure. If your variation doesn't beat the control, it's still an incredibly valuable learning opportunity. It tells you your hypothesis was off the mark, which helps you refine your understanding of your customers. Every outcome, win or lose, fuels a better, more informed experiment the next time around.

This creates the feedback loop of continuous improvement that sits at the heart of site conversion optimization. Don't forget that basic site performance also plays a huge role. One widely cited figure is that a one-second delay in page load time can reduce conversions by 7%, and sites that load in one second reportedly have conversion rates three times higher than those that load in five seconds (see the ecommerce conversion rate insights on redstagfulfillment.com). Even the best-designed experiment can be undermined by poor technical performance.

It’s an incredible feeling to see an A/B test come in with a clear winner. But let’s be honest—that win is completely worthless if you don’t act on it. Discovering that a new headline lifts conversions by 15% is just an interesting data point until you roll it out to 100% of your audience.

This is the final, critical stage where site conversion optimization stops being a series of one-off projects and becomes the engine for real, sustainable growth. It’s all about taking what you’ve learned and making it a permanent part of your user experience.

First things first: you have to actually implement the winning variation. This sounds simple, but it demands careful coordination between your marketing and development teams. You need to be sure the changes are deployed accurately and that your analytics tools are set up to track the post-implementation impact. The goal here is to confirm that the lift you saw in a controlled test translates to the real world.

Once the winning change is live, your job isn't over. Not even close. A smart optimization program doesn't just celebrate one win; it scales the learnings from that win across the entire business.

Let’s say a test on one product page revealed that adding customer testimonials just below the "Add to Cart" button dramatically increased trust and conversions. That insight shouldn't live and die on a single page.

This is your chance to apply that learning everywhere it makes sense.

This approach creates a ripple effect, multiplying the value of a single experiment. You’re not just optimizing a page; you're evolving your entire marketing playbook based on proven, data-driven insights about what motivates your customers.

Now we get to the real power of an integrated system. This is where you create a cycle of continuous improvement that can directly lower your customer acquisition costs. Modern marketing demands that your on-site optimizations and your ad platforms stay in constant communication. When they're disconnected, you're leaving a lot of efficiency on the table.

Think about it. Your A/B test might prove that a specific landing page variation is a conversion machine. But ad platforms like Facebook and Google are still blindly sending traffic to both the old and new pages, learning slowly from their own limited data. They have no direct insight into your test results.

This disconnect is exactly the problem that conversion sync tools were built to solve. Instead of letting ad platform algorithms guess, these tools act as a direct line of communication, feeding them accurate, server-side conversion data in near real-time.

Platforms like Cometly take this a step further. When you find a winning variation, you can ensure that only the conversion data from that winning page is sent back to Facebook or Google. This starts training their algorithms on what's working best right away.
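The filtering idea itself is simple. The sketch below is a hypothetical illustration, not Cometly's implementation: it keeps only conversions from the winning variation before they are forwarded to an ad platform. The `ConversionEvent` fields are assumptions, and the deduplication by `event_id` is an extra safeguard added here against the same conversion being delivered twice.

```python
from dataclasses import dataclass


@dataclass
class ConversionEvent:
    event_id: str
    landing_page_variant: str  # which test variation the converting visitor saw
    value: float


def events_to_forward(events, winning_variant):
    """Keep only conversions from the winning variation, deduplicated by event_id."""
    seen = set()
    selected = []
    for event in events:
        if event.landing_page_variant != winning_variant or event.event_id in seen:
            continue
        seen.add(event.event_id)
        selected.append(event)
    return selected


events = [
    ConversionEvent("evt-1", "A", 40.0),
    ConversionEvent("evt-2", "B", 55.0),
    ConversionEvent("evt-2", "B", 55.0),  # duplicate delivery of the same conversion
    ConversionEvent("evt-3", "B", 30.0),
]
for event in events_to_forward(events, winning_variant="B"):
    print(event.event_id, event.value)
```

The filtered list is what you would then pass to a server-side sender, so the ad platform only learns from conversions on the page you've proven works.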

Here’s the practical impact:

This intelligent feedback loop transforms your site conversion optimization efforts from a standalone activity into the engine that powers your entire customer acquisition strategy. It’s how you move beyond one-off wins and start building a scalable, data-driven system for predictable and profitable growth.

Even with a solid framework, you're bound to run into some specific questions once you start digging into site conversion optimization. Let's walk through a few of the most common hurdles teams hit when trying to turn their data into real, measurable growth.

One of the first questions that always comes up is about A/B testing: how long do you actually need to run a test to trust the results? The answer isn't a fixed number of days; it depends on reaching statistical significance.

Forget about a fixed timeframe like "one week." The right duration depends entirely on your website traffic and the conversion rate of whatever goal you're tracking. A high-traffic homepage might get you a confident result in just a few days, but a lower-traffic page could easily take a few weeks.

The golden rule here is to collect enough data. Run the test until each variation has a large enough sample to support a confident result; the common convention is a 95% confidence level (a 5% significance threshold).

Never, ever stop a test just because one version jumps ahead early. Those initial leads are often just random noise. Let your test run for at least one full business cycle (usually one to two weeks) to smooth out any differences in user behavior between weekdays and weekends.

Before you even launch, use an A/B test calculator to figure out the sample size you'll need. This keeps you from making emotional decisions and ensures you're basing your choices on real user behavior, not a lucky streak.

This is the classic "it depends" question, because there's no magic number that works for everyone. A "good" conversion rate is completely relative to your industry, offer, and traffic source.

For instance, a simple email signup form might convert at 10% or higher. But for an e-commerce store, the average overall conversion rate is often closer to 2-3%.

Your real benchmark should always be your own historical data. The whole point of site conversion optimization is to continuously beat your own baseline. Pushing your conversion rate from 2% to 3% might not sound like a lot, but it’s a massive 50% increase in conversions from the traffic you already have. Focus on improving against yourself.
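The 50% figure is a relative lift, not a change in percentage points. The quick calculation below shows both views; the 50,000 monthly visitors is a hypothetical traffic level.

```python
def relative_lift(baseline_rate: float, new_rate: float) -> float:
    """Relative change in conversion rate versus the baseline."""
    return (new_rate - baseline_rate) / baseline_rate


def extra_conversions(visitors: int, baseline_rate: float, new_rate: float) -> float:
    """Additional conversions from the same traffic at the new rate."""
    return visitors * (new_rate - baseline_rate)


print(f"{relative_lift(0.02, 0.03):.0%}")                 # 2% -> 3% is a 50% relative lift
print(f"{extra_conversions(50_000, 0.02, 0.03):.0f}")     # extra conversions per 50,000 visitors
```

A one-point gain on 50,000 visitors means roughly 500 extra conversions without buying a single additional click.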

For a deeper look at the core principles behind this process, it's worth exploring dedicated resources on Conversion Rate Optimization (CRO).

Technically, you can make manual changes to your website and see what happens. But if you're serious about optimization, trying to do it without the right software is like flying blind. You need specialized tools for accurate measurement, controlled testing, and deep analysis.

Here’s the essential toolkit for anyone getting serious about CRO:

These tools aren't just nice-to-haves; they provide the infrastructure you need to diagnose problems, test your ideas scientifically, and measure the true impact on your bottom line. If you're looking for more specific strategies, our guide on how to optimize landing pages is a great place to start.

Ready to build a rock-solid data foundation and scale your wins? Cometly provides the accurate attribution and conversion sync tools you need to optimize with confidence. See how it works at https://www.cometly.com.